
☐ Update videos for the first figure

Replace the teaser video with updated demonstration materials.

☐ Add more blocks for interactive 3D point cloud visualization

Expand the qualitative visualization section with additional 3D model viewers and examples; add virtual spheres.

Through the Perspective of LiDAR: A Feature-Enriched and Uncertainty-Aware Annotation Pipeline for Terrestrial Point Cloud Segmentation

Note: This page is currently being updated with new content. (Oct-14-2025)

Abstract

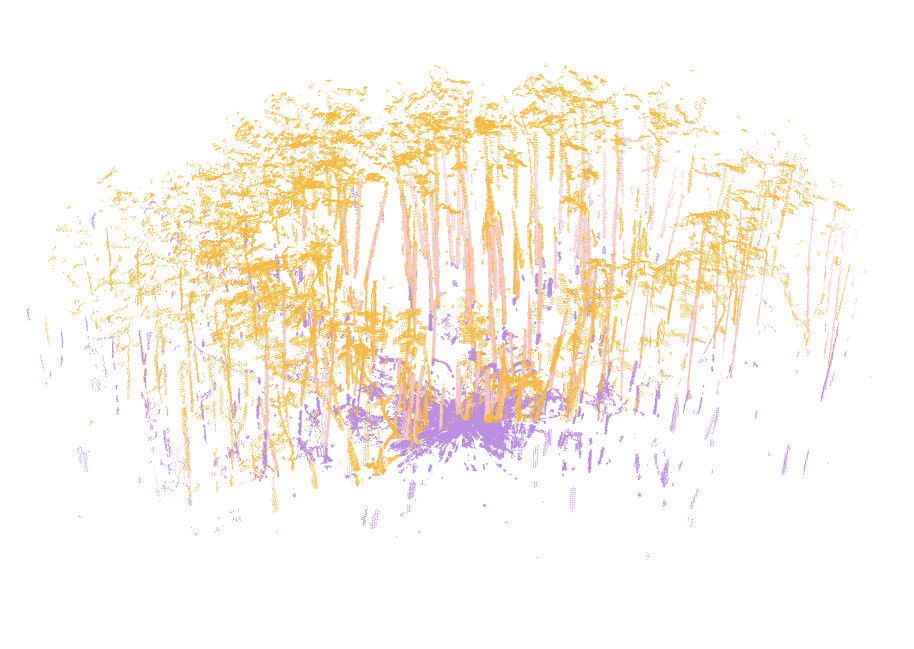

Accurate semantic segmentation of terrestrial laser scanning (TLS) point clouds is limited by costly manual annotation. We propose a semi-automated, uncertainty-aware pipeline that integrates spherical projection, feature enrichment, ensemble learning, and targeted annotation to reduce labeling effort while sustaining high accuracy. Our approach projects 3D points to a 2D spherical grid, enriches pixels with multi-source features, and trains an ensemble of segmentation networks to produce pseudo-labels and uncertainty maps, the latter guiding annotation of ambiguous regions. The 2D outputs are back-projected to 3D, yielding densely annotated point clouds supported by a three-tier visualization suite (2D feature maps, 3D colorized point clouds, and compact virtual spheres) for rapid triage and reviewer guidance. Using this pipeline, we built Mangrove3D, a semantic segmentation TLS dataset for mangrove forests. We further evaluated data efficiency and feature importance to address two key questions: (1) how much annotated data is needed, and (2) which features matter most. Results show that performance saturates after about 12 annotated scans, that geometric features contribute the most, and that compact nine-channel stacks capture nearly all discriminative power (mean Intersection over Union, mIoU, plateaus around 0.76). Finally, we confirm generalization of our feature-enrichment strategy through cross-dataset tests on ForestSemantic and Semantic3D. Our contributions include: (i) a robust, uncertainty-aware TLS annotation pipeline with visualization tools; (ii) the Mangrove3D dataset; and (iii) empirical guidance on data efficiency and feature importance, enabling scalable, high-quality segmentation of TLS point clouds for ecological monitoring and beyond.
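As a rough illustration of the ensemble step described in the abstract, the sketch below averages per-member softmax outputs, takes the argmax as a pseudo-label, and scores each pixel by normalized predictive entropy. The entropy measure, the function name `ensemble_pseudo_labels`, and the `confidence_threshold` value are assumptions made for illustration; this page does not state which uncertainty measure or threshold the pipeline actually uses.

```python
import numpy as np

def ensemble_pseudo_labels(member_probs, confidence_threshold=0.9):
    """Combine ensemble softmax maps into pseudo-labels and an uncertainty map.

    member_probs: array of shape (M, C, H, W), one softmax map per ensemble member.
    Returns (pseudo, entropy): pseudo is (H, W) with -1 where confidence is low,
    entropy is (H, W) scaled to [0, 1].
    """
    probs = np.asarray(member_probs, dtype=np.float64)
    mean_probs = probs.mean(axis=0)          # (C, H, W) ensemble average
    labels = mean_probs.argmax(axis=0)       # most likely class per pixel
    confidence = mean_probs.max(axis=0)      # probability of that class

    # Predictive entropy of the averaged distribution (assumed measure).
    eps = 1e-12
    entropy = -(mean_probs * np.log(mean_probs + eps)).sum(axis=0)
    entropy /= np.log(mean_probs.shape[0])   # normalize by log(C)

    # Keep confident pixels as pseudo-labels; mark the rest (-1) for review.
    pseudo = np.where(confidence >= confidence_threshold, labels, -1)
    return pseudo, entropy
```

Pixels marked `-1`, or those with high entropy, would be the natural candidates for targeted manual annotation.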

Method

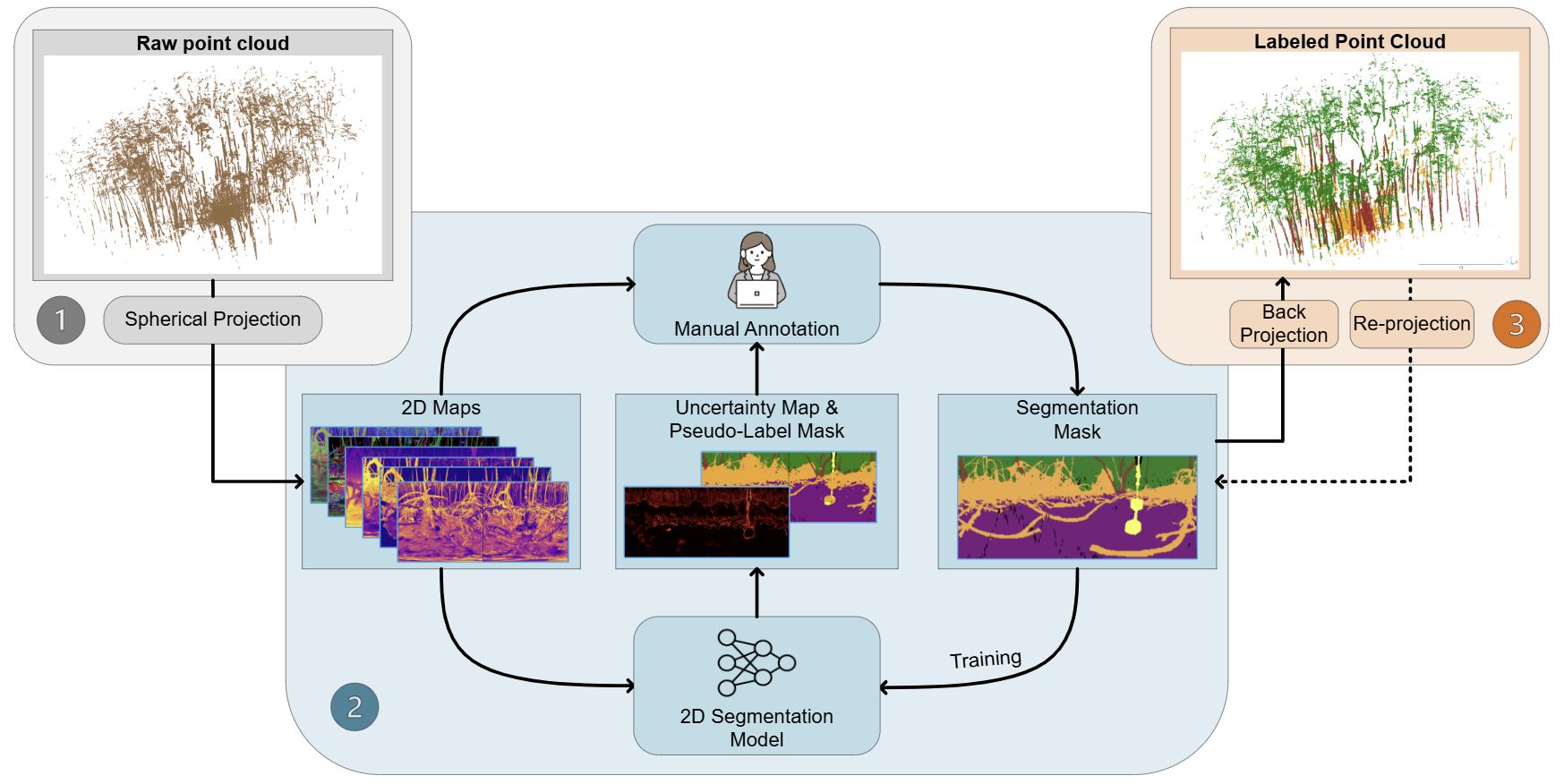

Three-stage workflow for annotating terrestrial-LiDAR scans. Stage 1: Spherical projection converts raw TLS points into two-dimensional feature maps and pseudo-RGB images. Stage 2: An iterative loop combines active learning and self-training: an ensemble DNN model is repeatedly refined using uncertainty-guided queries and high-confidence pseudo-labels. Stage 3: The resulting 2D segmentation masks are back-projected to 3D, the labels are refined in 3D space, and the refined labels are reprojected to 2D, yielding a fully annotated point cloud and a refined 2D segmentation mask.
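The projection and back-projection in Stages 1 and 3 can be sketched roughly as follows. This is a minimal NumPy example, not the authors' implementation: it assumes points are given in the scanner frame, maps the full sphere onto an equirectangular grid, and keeps the nearest return per pixel. The grid size and the function names `spherical_project` and `back_project` are hypothetical.

```python
import numpy as np

def spherical_project(points, height=64, width=128):
    """Project (N, 3) scanner-frame points onto an equirectangular range image.

    Returns (range_image, row, col); row/col give each point's pixel, so
    2D labels can later be copied back to the 3D points.
    """
    pts = np.asarray(points, dtype=np.float64)
    r = np.linalg.norm(pts, axis=1)
    azimuth = np.arctan2(pts[:, 1], pts[:, 0])                    # [-pi, pi]
    elevation = np.arcsin(np.clip(pts[:, 2] / np.maximum(r, 1e-12), -1.0, 1.0))

    # Azimuth maps to columns, elevation (top = +90 degrees) maps to rows.
    col = ((azimuth + np.pi) / (2 * np.pi) * width).astype(int) % width
    row = ((np.pi / 2 - elevation) / np.pi * height).astype(int)
    row = np.clip(row, 0, height - 1)

    # Keep the nearest return when several points fall into one pixel.
    range_image = np.full((height, width), np.inf)
    np.minimum.at(range_image, (row, col), r)
    range_image[np.isinf(range_image)] = np.nan                   # empty pixels
    return range_image, row, col

def back_project(mask, row, col):
    """Copy per-pixel labels from a 2D mask back to the original 3D points."""
    return np.asarray(mask)[row, col]
```

In this sketch every point that shares a pixel receives the same label on back-projection, which is one reason a refinement pass in 3D space, as in Stage 3, is useful. A real TLS scanner covers a limited vertical field of view, so the row mapping would normally be restricted to that range.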

Qualitative Visualization

Mangrove3D Segmentation Results

BibTeX

@misc{zhang2025perspectivelidarfeatureenricheduncertaintyaware,

title={Through the Perspective of LiDAR: A Feature-Enriched and Uncertainty-Aware Annotation Pipeline for Terrestrial Point Cloud Segmentation},

author={Fei Zhang and Rob Chancia and Josie Clapp and Amirhossein Hassanzadeh and Dimah Dera and Richard MacKenzie and Jan van Aardt},

year={2025},

eprint={2510.06582},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2510.06582},

}

Acknowledgements

The authors are grateful to Dr. Nidhal Carla Bouaynaya and Dr. Bartosz Krawczyk for their valuable insights on uncertainty evaluation and semantic segmentation methods. We thank Mr. Brett Matzke for his assistance in setting up the computing resources. We also acknowledge RIT Research Computing for providing access to NVIDIA A100 computing resources.